Homework #

Finite element methods (FEM) 1 #

Table of Contents#

%precision 3

%matplotlib inline

import numpy as np

import matplotlib.pyplot as plt

import scipy.linalg as sl

import scipy.sparse as sp

import scipy.sparse.linalg as spla

# the following allows us to plot triangles indicating convergence order

from mpltools import annotation

# as we're in 2D we will be doing some 3D plotting

from mpl_toolkits.mplot3d import Axes3D

# and using some colormaps

from matplotlib import cm

# and we will create some animations!

import matplotlib.animation as animation

from IPython.display import HTML

from pprint import pprint

# some useful functions from the lecture

def hat_function(i, x_nodes):

""" Top hat basis function at node i.

Returns a function for later use in quadrature rules.

"""

assert(i>=0 and i<=len(x_nodes)-1)

dx = np.diff(x_nodes)

if i==0:

hat = lambda x: np.select([ x<=x_nodes[i+1] ], [ (x_nodes[i+1] - x)/dx[i] ] ,0.0)

elif i==len(x_nodes)-1:

hat = lambda x: np.select([ x>=x_nodes[i-1] ], [ (x - x_nodes[i-1])/dx[i-1] ] ,0.0)

else:

hat = lambda x: np.select(

[ np.all([[x>=x_nodes[i-1]],[x<=x_nodes[i]]], axis=0)[0],

np.all([[x>=x_nodes[i]],[x<=x_nodes[i+1]]], axis=0)[0]] ,

[ (x-x_nodes[i-1])/dx[i-1], (x_nodes[i+1]-x)/dx[i] ] ,0.0)

return hat

def hat_function_deriv(i, x_nodes):

""" Top hat basis function derivative at node i.

Returns a function for later use in quadrature rules.

"""

assert(i>=0 and i<=len(x_nodes)-1)

dx = np.diff(x_nodes)

if i==0:

hat = lambda x: np.select([ x<=x_nodes[i+1] ], [ -1/dx[i] ] ,0.0)

elif i==len(x_nodes)-1:

hat = lambda x: np.select([ x>=x_nodes[i-1] ], [ 1/dx[i-1] ] ,0.0)

else:

hat = lambda x: np.select(

[ np.all([[x>=x_nodes[i-1]],[x<=x_nodes[i]]], axis=0)[0],

np.all([[x>=x_nodes[i]],[x<=x_nodes[i+1]]], axis=0)[0]] ,

[ 1/dx[i-1], -1/dx[i] ] ,0.0)

return hat

def mass_matrix(x):

dx = np.diff(x)

M = np.zeros((len(x), len(x)))

M[1:-1,1:-1] = np.diag(dx[:-1]/3 + dx[1:]/3 , k=0)

M += np.diag(dx/6 , k=-1) + np.diag(dx/6 , k=1)

M[0,0] = dx[0]/3

M[-1,-1] = dx[-1]/3

return M

# don't need the composite version of Simpson's rule here - want the version

# that is able to integrate over a single interval and evaluates the function

# at the two end points as well as the mid point

def simpsons_rule(a, b, function, number_intervals=10):

""" Function to evaluate Simpson's rule.

Note that this implementation takes the function as an argument,

and evaluates this at the midpoint of subintervals in addition to the

end point. Hence additional information is generated and used through

additional function evaluations.

This is different to the function/implementation available with SciPy

where discrete data only is passed to the function.

Bear this in mind when comparing results - there will be a factor of two

in the definition of "n" we need to be careful about!

Also note that this version of the function performs redundant function

evaluations - see the **composite** implementation below.

"""

interval_size = (b - a)/number_intervals

assert interval_size > 0

assert type(number_intervals) == int

I_S = 0.0

# Loop to valuate Simpson's formula over each interval

for i in range(number_intervals):

# Find a, c, and b

this_bin_start = a + interval_size * (i)

this_bin_mid = this_bin_start + interval_size/2

this_bin_end = this_bin_start + interval_size

# Calculate the rule and add to running total.

I_S += (interval_size/6) * (function(this_bin_start) +

4 * function(this_bin_mid) + function(this_bin_end))

# Return our running total result

return I_S

def simpsons_composite_rule(a, b, function, number_intervals=10):

"""Function to evaluate the composite Simpson's rule only using

function evaluations at (number_intervals + 1) points.

This implementation requires that the number of subintervals (number_intervals) be even

"""

assert number_intervals % 2 == 0, "number_intervals is not even"

interval_size = (b - a) / number_intervals

# start with the two end member values

I_cS2 = function(a) + function(b)

# add in those terms with a coefficient of 4

for i in range(1, number_intervals, 2):

I_cS2 += 4 * function(a + i * interval_size)

# and those terms with a coefficient of 2

for i in range(2, number_intervals-1, 2):

I_cS2 += 2 * function(a + i * interval_size)

return I_cS2 * (interval_size / 3.0)

# now a function to reconstruct the p/w linear function

def pwlinear(x_nodes, y_nodes, x):

"""Function to return f_h(x) at locations given in x,

with top hat functions on a mesh given by x_nodes, and

corresponding weights given in y_nodes.

"""

val = np.zeros_like(x)

# loop over x values, equivalently the basis functions

for i in range(len(x)):

# which mesh node is directly to the left of x[i] ?

node = np.where( x[i] >= x_nodes )[0][-1]

# because of the greater than or equals above, deal with problem of hitting last node

node = np.minimum(len(x_nodes)-2,node)

# add together the contributions from the two hat functions at this x location -

# the hat functions centred on "node" (to the left)

phi_node = hat_function(node, x_nodes)

# and "node+1" (to the right)

phi_node_plus_1 = hat_function(node+1, x_nodes)

# now take the weighted sum of these two hat functions

val[i] = y_nodes[node]*phi_node(x[i]) + y_nodes[node+1]*phi_node_plus_1(x[i])

return val

Some more complex BVP solutions using the FEM#

We now go through some further examples of solving BVPs with more complex RHS and/or BCs.

The following is just here as a read through of some more complex cases - there aren’t specific questions to answer.

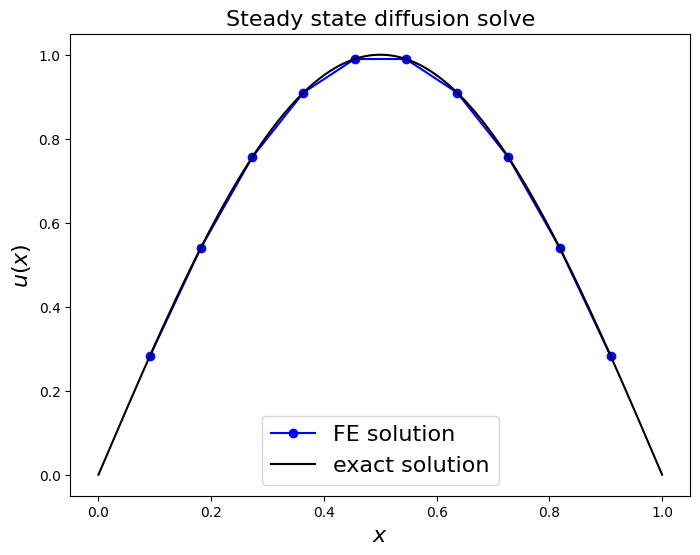

An example with a more complex RHS with homogeneous Dirichlet BCs [\(\star\)]#

Now let’s consider an example of a problem with a more complex \(f\) and hence solution.

The complexity of \(f\) means we have more to do when evaluating the RHS vector, but still having homogeneous Dirichlet BCs means we don’t have to change the LHS.

# Note that this is essentially an MMS - we define the solution first that satisfies the BCs

# and then substitute into the BVP to find the appropriate RHS source term

def u_exact(x):

return np.sin(np.pi*x)

def f(x):

# just -u''

return (np.pi**2)*np.sin(np.pi*x)

N_elements_CG = 11

N_nodes_CG = N_elements_CG + 1

x_nodes = np.linspace(0, 1 , N_nodes_CG )

dx = np.diff(x_nodes)

# space to store our discretisaton matrices

K = np.zeros((N_nodes_CG - 2, N_nodes_CG - 2))

RHS = np.zeros(N_nodes_CG - 2)

K = np.diag(1. / dx[:-1] + 1. / dx[1:] , k=0) \

- np.diag(1. / dx[1:-1] , k=-1) \

- np.diag(1. / dx[1:-1] , k=1)

# form RHS vector by integrating function f multiplied by basis function

# loop over internal nodes

for node in range(1, N_nodes_CG - 1):

# and integrate our function multiplied by the corresponding cts basis function

phi_i = hat_function(node, x_nodes)

integrand = lambda x : f(x) * phi_i(x)

RHS[node-1] = simpsons_rule(x_nodes[node-1], x_nodes[node+1], integrand, 2 )

# NB. if we increase the number of intervals we can get arbitrarily

# small errors at the nodes

# now we have our equation for the unknowns (K @ u=RHS) solve for u:

u = sl.solve(K,RHS)

# plot the result

fig = plt.figure(figsize=(8, 6))

ax1 = fig.add_subplot(111)

ax1.set_xlabel('$x$', fontsize=16)

ax1.set_ylabel('$u(x)$', fontsize=16)

ax1.set_title('Steady state diffusion solve', fontsize=16)

ax1.plot(x_nodes[1:-1], u, 'bo-', label='FE solution')

x_fine = np.linspace(0, 1, 1000)

ax1.plot(x_fine, u_exact(x_fine), 'k-', label='exact solution')

ax1.legend(loc='best', fontsize=16);

print('error (RMS at nodes only) = ',

sl.norm(u - u_exact(x_nodes[1:-1]) )

/np.sqrt(len(u)) )

error (RMS at nodes only) = 5.160570601460748e-06

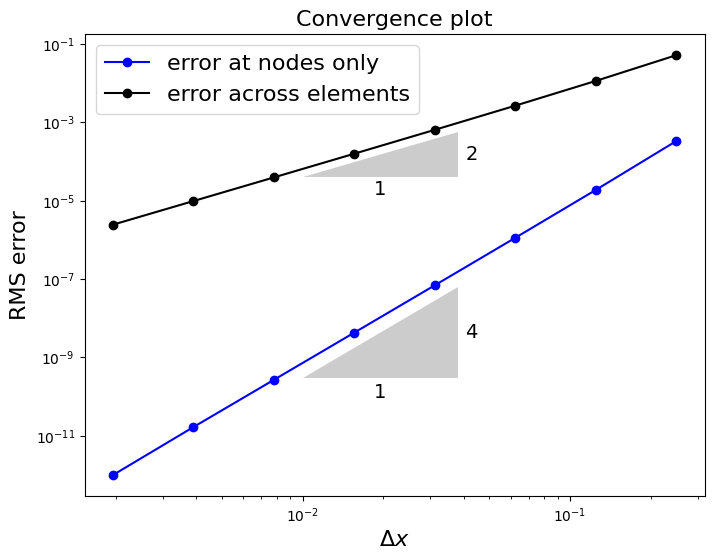

A convergence analysis#

Let’s check how the error converges with \(\Delta x\).

We will compute the RMS error at the nodes only (as above), but will also evaluate both the exact solution and the p/w linear numerical approximation on a finer mesh and compute the RMS error of the difference - this will be an approximation to the L2 error.

def u_exact(x):

return np.sin(np.pi*x)

def f(x):

# just -u''

return (np.pi**2)*np.sin(np.pi*x)

# some meshes of increasingly finer resolution to loop over

Ns = [2**p for p in range(2, 10)]

errors = np.zeros((2,len(Ns)))

for i, N in enumerate(Ns):

N_elements_CG = N

N_nodes_CG = N_elements_CG + 1

x_nodes = np.linspace(0, 1 , N_nodes_CG )

dx = np.diff(x_nodes)

# space to store our discretisaton matrices

K = np.zeros((N_nodes_CG - 2, N_nodes_CG - 2))

RHS = np.zeros(N_nodes_CG - 2)

K = np.diag(1. / dx[:-1] + 1. / dx[1:] , k=0) \

- np.diag(1. / dx[1:-1] , k=-1) \

- np.diag(1. / dx[1:-1] , k=1)

# form RHS vector by integrating function f multiplied by basis function

# loop over internal nodes

for node in range(1, N_nodes_CG - 1):

# and integrate our function multiplied by the corresponding cts basis function

phi_i = hat_function(node, x_nodes)

integrand = lambda x : f(x) * phi_i(x)

RHS[node-1] = simpsons_rule(x_nodes[node-1], x_nodes[node+1], integrand, 2 )

u = sl.solve(K,RHS)

# RMS error of the nodal errors - cf. comments in main lecture text

errors[0,i] = sl.norm(u - u_exact(x_nodes[1:-1])) / np.sqrt(len(u))

# now let's do the RMS on a fine mesh to approximate the L2 norm

x_fine = np.linspace(x_nodes[1],x_nodes[-2],1000)

errors[1,i] = sl.norm(pwlinear(x_nodes[1:-1], u, x_fine) - u_exact(x_fine)) \

/ np.sqrt(len(x_fine))

fig = plt.figure(figsize=(8, 6))

ax1 = fig.add_subplot(111)

ax1.set_xlabel('$\Delta x$', fontsize=16)

ax1.set_ylabel('RMS error', fontsize=16)

ax1.set_title('Convergence plot', fontsize=16)

ax1.loglog(1./np.array(Ns), errors[0,:], 'bo-', label='error at nodes only')

ax1.loglog(1./np.array(Ns), errors[1,:], 'ko-', label='error across elements')

ax1.legend(loc='best', fontsize=16)

annotation.slope_marker((1e-2, 4e-5), (2, 1), ax=ax1,

size_frac=0.25, pad_frac=0.05, text_kwargs = dict(fontsize = 14))

annotation.slope_marker((1e-2, 3e-10), (4, 1), ax=ax1,

size_frac=0.25, pad_frac=0.05, text_kwargs = dict(fontsize = 14))

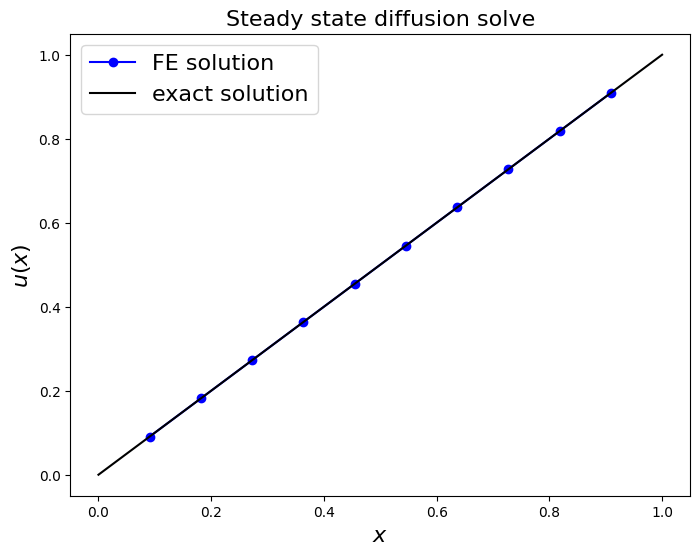

Dealing with more complex BCs (Inhomogeneous Dirichlet) [\(\star\)]#

Now let’s see what we need to do in the case that the BCs are non-zero.

Problem description#

Now let’s consider a BVP problem we considered in a previous lecture (specifically the diffusion only case with fixed values at the end points - recall the exact solution to the problem was a linear that joined the two boundary condition values).

Find \(u\equiv u(x)\) which satisfies the ODE over the interval \([0,1]\)

for a given source term \(\,f\), with inhomogeneous Dirichlet boundary conditions:

We’ll seek to code up the general case, although to match L7 we are interested in a case with \(\,f\equiv 0\,\), \(\,u_L=0\,\) and \(\,u_R=1\).

Finite element approximation#

In this case we write our solution as

where \(\phi_0\) and \(\phi_n\) are defined as per the other basis functions (i.e. \(\phi_0\) is unity at node 0 (the boundary) and zero elsewhere), and we choose \(u_0=u_L\) and \(u_N=u_R\). These additional components are hence essentially interpolating the prescribed boundary data.

So we are still actually solving for the internal degrees of freedom: \(u_j\), for \(j=1,\ldots,N-1\).

This means that it is still appropriate for us to use the reduced space \(V_h^0\) to test our solution against, i.e. we again ignore the boundary terms in the weak form and ask that

Note a difference now though that our \(u_h\) does include known \(\phi_0 \) and \(\phi_n\) terms. Let’s see now how this affects things.

In this case the integral in the LHS can be written as

Note that we will move the (two) boundary term contributions on to the right hand side as they are known.

We explain in the code that follows how these additional contributions are added into the RHS vector.

Our left hand side “discretisation” matrix is the same \((N-1)\times (N-1)\) matrix as above (the original full \(K\) without the first and last rows)

while the RHS vector has entries

Our discretised system is again \(\,K\boldsymbol{u} = \boldsymbol{b}\).

If we solve this matrix equation for the coefficients \(\boldsymbol{u}\) then we have a numerical solution to our problem.

uL = 0.

uR = 1.

def f(x):

return 0.0

def u_exact(x):

return uL + x*(uR-uL)

N_elements_CG = 11

N_nodes_CG = N_elements_CG + 1

x_nodes = np.linspace(0, 1 , N_nodes_CG )

dx = np.diff(x_nodes)

# space to store our discretisaton matrices

K = np.zeros((N_nodes_CG - 2, N_nodes_CG - 2))

RHS = np.zeros(N_nodes_CG - 2)

K = np.diag(1. / dx[:-1] + 1. / dx[1:] , k=0) \

- np.diag(1. / dx[1:-1] , k=-1) \

- np.diag(1. / dx[1:-1] , k=1)

# form RHS vector by integrating function f multiplied by basis function

# loop over internal nodes

for node in range(1, N_nodes_CG - 1):

# and integrate our function multiplied by the corresponding cts basis function

phi_i = hat_function(node, x_nodes)

integrand = lambda x : f(x) * phi_i(x)

# simpsons with number intervals = 2 can integrate this exactly

RHS[node-1] = simpsons_rule(x_nodes[node-1], x_nodes[node+1], integrand, 2 )

# Add in the extra entries due to the inhomogeneous BCs

# At the left the integral is only non-zero when d(phi_i)/dx and d(phi_0)/dx both have

# support - this only occurs over the first element when i=1 (remembering that i=0

# isn't in our trial space. The integrand is then the value u(0) = uL

# multiplied by the derivative of \phi_1 in the first cell (1/dx[0])

# multiplied by the derivative of \phi_0 in the first cell (-1/dx[0]).

# Overall this is a constant, and hence to do the integral we just need to

# multiply it by the size of the cell (dx[0]), and subtract the result from the

# current RHS entry for this node:

RHS[0] = RHS[0] - (uL * 1/dx[0] * (-1/dx[0]))*dx[0]

# and similarly for the RHS - we need to update the final entry

RHS[-1] = RHS[-1] - (uR * (-1/dx[-1]) * (1/dx[-1]))*dx[-1]

# now solve

u = sl.solve(K,RHS)

fig = plt.figure(figsize=(8, 6))

ax1 = fig.add_subplot(111)

ax1.set_xlabel('$x$', fontsize=16)

ax1.set_ylabel('$u(x)$', fontsize=16)

ax1.set_title('Steady state diffusion solve', fontsize=16)

ax1.plot(x_nodes[1:-1], u, 'bo-', label='FE solution')

x_fine = np.linspace(0, 1, 1000)

ax1.plot(x_fine, u_exact(x_fine), 'k-', label='exact solution')

ax1.legend(loc='best', fontsize=16);

print('error (RMS at nodes only) = ',

sl.norm(u - u_exact(x_nodes[1:-1]) )

/np.sqrt(len(u)) )

error (RMS at nodes only) = 3.142637229273806e-16

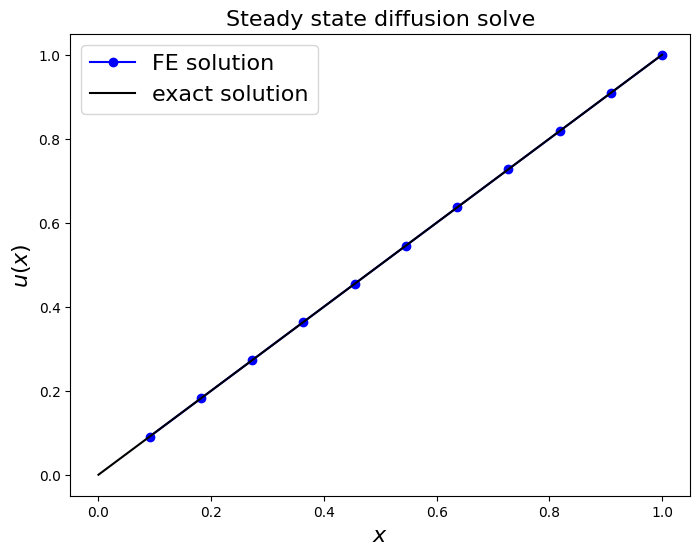

Dealing with more complex BCs (Neumann conditions) [\(\star\)]#

One of the big advantages of the FEM is that realistic (i.e. physically meaningful) BCs are relatively easy to incorporate.

Let’s consider Neumann conditions (i.e. specifying the value of the derivative at the boundaries) which will see can naturally be incorporated into our FEM formulation, but in a very different way to how we deal with Dirichlet conditions.

Let’s actually consider a problem with one Dirichlet and one Neumann BC.

Problem description#

Find \(u\equiv u(x)\) which satisfies the ODE over the interval \([0,1]\)

for a given source term \(\,f\), with an inhomogeneous Dirichlet boundary condition at the left and an inhomogeneous Nemuann BC at the right:

NB. for this particular ODE problem specifying two Neumann conditions yields an ill-posed problem - why is this?

Variational form of problem#

In this case we integrate by parts

and keep the boundary integral on those parts of the domain where the Neumann condition is applied (we drop the boundary contribution at the Dirichlet boundary for the same reasons as in the previous example):

where we have substituted in our known Neumann data: \(\,u'(1)= u^N_{R}\) and used the fact that we enforce the Dirichlet with test functions with the property that \(v(0)=0\).

Aside: homogeneous Neumann boundary conditions#

Note that the homogeneous form of Neumann conditions are especially easy to deal with, we simply do not perform the boundary integration.

Doing nothing is the correct implementation of the boundary condition for the correct underlying problem (i.e. variational) formulation.

So if in our very first example where we applied homogeneous Dirichlet BCs by actually solving over \(i,j = 1,\ldots, N-1\). If we had solved for \(i,j = 0,\ldots, N\) while ignoring the boundaries, we would have effectively solved a version of the problem with homogeneous Neumann BCs (which would have led to a singular matrix for this particular problem due to this issue with two homogeneous Neumann conditions explained above).

Finite element approximation#

In this case we write our solution as

Note that explicit here is that fact that we don’t know our solution at the right hand boundary (and hence we need to solve for \(u_N\)). We thus strip out the \(u_0\) term and define our test function space correspondingly to be that spanned by \(\phi_i\) for \(i=1, 2,\ldots, N\). We will thus end up solving in this case a \(N\times N\) system.

Our variational form is in this case

\(\delta_{i,n}\) is the Kronecker delta which is equal to one when \(i=n\), otherwise it is zero. This is introduced here simply to emphasise that the Neumann boundary data contributes as a boundary integral at the right hand boundary node (i.e. \(i=n\)) only.

In this case the integral in the LHS can be written as

As before we will move the boundary Dirichlet contribution over to the right hand side as it is known.

Our “discretisation” matrix \(K\) in this case keep the final row of the full \(K\) we originally defined, but does not include the first row.

and

So now in this case we have to add into \(\boldsymbol{b}\) a term corresponding to the Dirichlet condition, as well as the Neumann condition. We explained how to deal with the Dirichlet term in a previous example. As we will see in the code below adding in the Neumann term is especially easy.

uL = 0.

uRn = 1.

def f(x):

return 0.0

def u_exact(x):

return uL + uRn*x

N_elements_CG = 11

N_nodes_CG = N_elements_CG + 1

x_nodes = np.linspace(0, 1 , N_nodes_CG )

dx = np.diff(x_nodes)

# space to store our discretisaton matrices

K = np.zeros((N_nodes_CG - 1, N_nodes_CG - 1))

RHS = np.zeros(N_nodes_CG - 1)

K[:-1,:-1] = np.diag(1. / dx[:-1] + 1. / dx[1:] , k=0) \

- np.diag(1. / dx[1:-1] , k=-1) \

- np.diag(1. / dx[1:-1] , k=1)

# add in the extra bottom right entries

K[-1,-1] = 1. / dx[-1]

K[-1,-2] = -1. / dx[-1]

K[-2,-1] = -1. / dx[-1]

# form RHS vector by integrating function f multiplied by basis function

# loop over internal nodes

for node in range(1, N_nodes_CG-1):

# and integrate our function multiplied by the corresponding cts basis function

phi_i = hat_function(node, x_nodes)

integrand = lambda x : f(x) * phi_i(x)

# simpsons with number intervals = 2 can integrate this exactly

RHS[node-1] = simpsons_rule(x_nodes[node-1], x_nodes[node+1], integrand, 2 )

# need to add in the right hand boundary contribution to the RHS vector

# only contribution comes from the final element

phi_i = hat_function(N_nodes_CG-1, x_nodes)

integrand = lambda x : f(x) * phi_i(x)

RHS[-1] = simpsons_rule(x_nodes[-2], x_nodes[-1], integrand, 1 )

# Add in the extra entries due to the inhomogeneous BCs

# At the left the integral is only non-zero when phi_i and phi_0 both have

# support - this only occurs over the first element. The integrand is then

# uL multiplied by the derivative of \phi_1 in the first cell (1/dx[0])

# multiplied by the derivative of \phi_0 in the first cell (-1/dx[0]).

# This is a constant, and hence to do the integral we just need to

# multiply by the size of the cell (dx[0]):

RHS[0] = RHS[0] - (uL * 1/dx[0] * (-1/dx[0]))*dx[0]

# And finally, now also add a Neumann condition at the right -

# note we're perform a boundary integral here

RHS[-1] = RHS[-1] + uRn

u = sl.solve(K,RHS)

fig = plt.figure(figsize=(8, 6))

ax1 = fig.add_subplot(111)

ax1.set_xlabel('$x$', fontsize=16)

ax1.set_ylabel('$u(x)$', fontsize=16)

ax1.set_title('Steady state diffusion solve', fontsize=16)

ax1.plot(x_nodes[1:], u, 'bo-', label='FE solution')

x_fine = np.linspace(0, 1, 1000)

ax1.plot(x_fine, u_exact(x_fine), 'k-', label='exact solution')

ax1.legend(loc='best', fontsize=16);

print('error (RMS at nodes only) = ',

sl.norm(u - u_exact(x_nodes[1:]) )

/np.sqrt(len(u)) )

error (RMS at nodes only) = 1.2513816881060796e-15

Homework - Solving on a non-uniform mesh#

We saw that for the initial BVP problem we considere in the class (homogeneous Dirichlet conditions with \(f\equiv 1\)) that the solution we obtained with exact (to round-off) at the nodes.

Confirm that this exactness is no longer true on a non-uniform mesh - try perturbing our internal nodes (i.e. keep the boundaries where they should be!) a small random amount.

Homework - A BVP with an inhomogeneous RHS and inhomogeneous Dirichlet BCs#

Consider the problem

with

and BCs

First derive the exact solution (integrate up twice and use the BCs to fix the constants of integration), and then use this solution to verify an appropriately implemented FEM solution.

Homework - Another BVP with a slightly more complex RHS#

Consider the problem

Integrate up twice to obtain the exact solution with two arbitrary constants of integration.

Consider the domain and use the the boundary conditions

to fix the values of the two constants in your exact solution.

Solve the problem using the finite element method and compare with your exact solution.

Homework - A more complex BVP (method of manufactured solutions)#

Consider the solution function

Verify that \(u(0)=0\) and \(u(1)=0\) for this function.

Compute

Solve the problem

with the \(f\) you’ve calculated on the interval \([0,1]\), with homogeneous Dirichlet BCs.

If you computed \(f\) correctly, and implemented your solver correctly, you should be able to demonstrate convergence to the exact solution we started with.

[Hint: the solver for this problem involve use of the discretisation matrix \(A\) we’ve used multiple times for simple BVPs, along with the mass matrix \(M\)].

Homework - A problem with non-constant coefficients [\(\star\star\)]#

The next step in complexity is thinking about how we would go about solving problems with non-constant coefficients.

The added complexity here is that we need to incorporate the variation of coefficients when we compute the entries of the LHS discretisation matrices

For example consider the general diffusion like problem

where \(a\equiv a(x)\) is a potentially non-constant diffusion coefficient.

Consider diffusion of heat in a bar where \(f(x)\) represents a hear source and \(a(x)\) represents the heat conductivity.

Suppose we are in the interval \(I=[0,1]\) with the completely general Robin boundary conditions

The parameters \(C_L\) and \(C_R\) can be used to control the relative contributions of the Dirichlet and Neumann conditions, with \(g_L\) and \(g_R\) the applied value of the BC. For example, in the limit of \(C\) being small we recover a homogeneous Neumann condition (and we are free to choose the \(C\)’s to be zero of course), and in the large limit we approximate a Dirichlet BC. Note that this means if we implement a general solver with these Robin BC, through the choice of \(C\) very large or very small (or actually zero), we approximate the implementation of these BCs.

Try developing a solver for this case and apply it to a problem where the diffusivity is given by

the source by

and with the BCs

Homework - Approximation using a discontinuous function [\(\star\)]#

We saw in the lecture how to approximate a given function using a p/w linear continuous function via \(L^2\) projection (which involved deriving the system with the mass matrix and solving for the weights in our expansion in terms of the basis functions).

We can generalise this to a case where we approximate our given function with a p/w linear discontinuous function. This essentially means that instead of having one unknown at every node, we actually have two, each of which “belongs” to the element either side of the mesh vertex.

We thus have more degrees of freedom to solve for (so we expect an improved solution), and need to think about the appropriate set of test functions to use to obtain the appropriately sizes square discrete system to solve. To do this we simply split our basis functions so each of them is only half of the hat function.

Suggest you take a look at my solution and try to follow what is going on.

Homework - Errors in function approximation [\(\star\)]#

Now compute the \(L^2\) norms of the errors in the three different approximations from the previous question.

Comments#

So our solver is 4th order accurate in terms of the nodal values, while it is second order accurate in the norm we really care about here - the L2 norm (which we approximate via RMS evaluated in a fine mesh).